GPU designs go to great lengths to obtain high efficiency, conveniently reducing the difficulty programmers face when programming graphics applications.

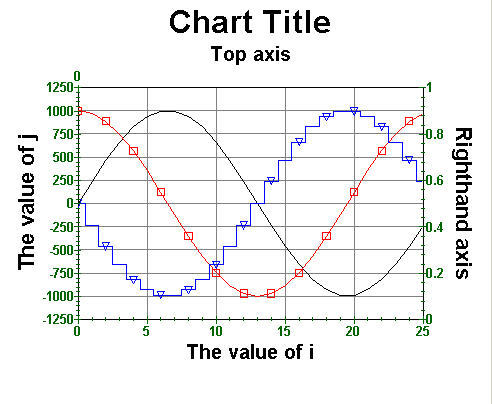

UCSD CPLOT QUEUE SOFTWARE

The key to high performance lies in strategies that hardware components and their corresponding software interfaces use to keep GPU processing resources busy. Despite the inherently parallel nature of graphics, however, efficiently mapping common rendering algorithms onto GPU resources is extremely challenging. Impressive statistics, such as ALU (arithmetic logic unit) counts and peak floating-point rates often emerge during discussions of GPU design. GPUs assemble a large collection of fixed-function and software-programmable processing resources. These platforms, which include GPUs, the STI Cell Broadband Engine, the Sun UltraSPARC T2, and, increasingly, multicore x86 systems from Intel and AMD, differentiate themselves from traditional CPU designs by prioritizing high-throughput processing of many parallel operations over the low-latency execution of a single task. The modern GPU is a versatile processor that constitutes an extreme but compelling point in the growing space of multicore parallel computing architectures. Both of these visual experiences require hundreds of gigaflops of computing performance, a demand met by the GPU (graphics processing unit) present in every consumer PC. Disappointed, the user exits the game and returns to a computer desktop that exhibits the stylish 3D look-and-feel of a modern window manager. Seconds later, the screen fills with a 3D explosion, the result of unseen enemies hiding in physically accurate shadows. KAYVON FATAHALIAN and MIKE HOUSTON, STANFORD UNIVERSITYĪ gamer wanders through a virtual world rendered in near- cinematic detail. GPUs a closer look As the line between GPUs and CPUs begins to blur, it’s important to understand what makes GPUs tick.
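The gap between a headline peak floating-point rate and the performance an application actually sees can be made concrete with simple arithmetic. The sketch below is a minimal model, assuming that peak rate is the product of ALU count, clock frequency, and floating-point operations each ALU retires per cycle (two, if each ALU completes one fused multiply-add per cycle), and that sustained throughput scales with the fraction of time the ALUs do useful work. The function names and the 256-ALU, 1 GHz configuration are hypothetical, chosen only for illustration, and do not describe any specific GPU.

```python
# Minimal peak-vs-sustained throughput model.
# All names and numbers here are hypothetical illustrations, not real GPU specs.


def peak_gflops(alu_count: int, clock_ghz: float, flops_per_alu_per_cycle: int = 2) -> float:
    """Peak rate in GFLOPS = ALUs x clock (GHz) x FLOPs per ALU per cycle.

    The default of 2 assumes each ALU completes one fused multiply-add
    (a multiply plus an add) every cycle.
    """
    return alu_count * clock_ghz * flops_per_alu_per_cycle


def achieved_gflops(peak: float, busy_fraction: float) -> float:
    """Sustained rate when the ALUs do useful work only busy_fraction of the time."""
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be in [0, 1]")
    return peak * busy_fraction


if __name__ == "__main__":
    # Hypothetical configuration: 256 ALUs clocked at 1 GHz.
    peak = peak_gflops(alu_count=256, clock_ghz=1.0)
    print(f"peak: {peak:.0f} GFLOPS")
    # The same hardware delivers far less if its ALUs are frequently idle.
    for busy in (1.0, 0.5, 0.1):
        print(f"{busy:.0%} busy -> {achieved_gflops(peak, busy):.0f} GFLOPS")
```

With these hypothetical numbers the peak works out to 512 GFLOPS, yet an ALU array that sits idle half the time delivers only half of that. This is why raw ALU counts say little on their own, and why the strategies for keeping processing resources busy matter so much.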
